Ops Center Analyzer Detail View Manual Logs Collection

Objective

Provide a procedure for manually collecting Ops Center Analyzer Detail View logs.

Environment

- Ops Center Analyzer Detail View 10.x

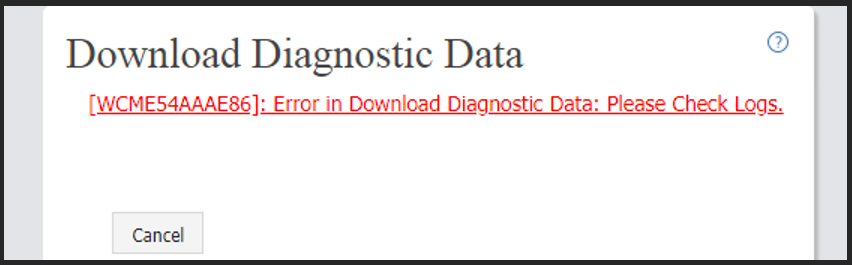

- On instances where diagnostic logs for the same is not downloadable due to below WCME54AAAE86 error:

Note: First attempt to collect the logs for Analyzer, Analyzer Detail View, and Probe using the procedures below.

Procedure

To troubleshoot Analyzer Detail View log collection, first follow the article below:

Troubleshoot Cannot Download Ops Center Analyzer Detail View Logs

If the Analyzer Detail View diagnostic logs still cannot be downloaded, perform the manual log collection procedure below.

Alternatively, logs can be collected with Linux HDCA getconfig (UNIX Getconfig Version 7.64).

1. Zip up the contents of the Analyzer Detail View directory /usr/local/megha/logs/ and upload the resulting files to TUF.

2. Provide the output of the following command:

• systemctl status firewalld (if OS is RHEL)

3. Provide the output of the following commands from the HDCA Server:

• find /data/megha -type d -user root

• find /data/megha -type f -user root

4. Provide the output of the following commands from the Analytics probe server:

• find /home/megha -type d -user root

• find /home/megha -type f -user root

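5. Provide the output of the command: nc -vz <Analytics probe server | HDCA Server IP address> 80

6. Check whether any large m.txt file exists and, if so, share it using the following steps:

a. SSH to the HDCA Server as the 'root' user.

b. Run the following command to check the file sizes. The first column of the output is the file size. Because grep G matches a capital G anywhere on the line, files whose path contains that letter are listed as well, as the sample output shows.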

Command: ls -lSch /usr/local/megha/db/conf/data/*/m.txt | awk '{print $5,$9}' | grep G

Sample Output:

[root@mc u1]# ls -lSch /usr/local/megha/db/conf/data/*/m.txt | awk '{print $5,$9}' | grep G

5.1G /usr/local/megha/db/conf/data/dfPort/m.txt

24K /usr/local/megha/db/conf/data/dfHG/m.txt

4.0K /usr/local/megha/db/conf/data/__sd_GarbageCollector/m.txt

4.0K /usr/local/megha/db/conf/data/__sd_LastGcInfo/m.txt

c. If any file in the above output is larger than 4 GB, create a tar archive of it and share the tar file along with the above command output.

Command: tar -zcvf <filename>.tar.gz <complete path of m.txt file>

Example: tar -zcvf dfPort.tar.gz /usr/local/megha/db/conf/data/dfPort/m.txt

The dfPort.tar.gz output file will be generated in the current directory.
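
The grep G filter in step b matches on the path as well as the size column, so it can list small files. As a stricter alternative, the sketch below uses the size test of find to list only m.txt files above a byte threshold. The function name list_large_mtxt and its parameters are illustrative and not part of the product, and the 4 GiB default is an assumption based on the 4 GB limit in step c.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with the product): list m.txt files that
# are larger than a byte threshold, one path per line.
#   $1  base directory (defaults to the path used in step b)
#   $2  size in bytes that a file must exceed (defaults to 4 GiB; an assumption)
list_large_mtxt() {
    local base=${1:-/usr/local/megha/db/conf/data}
    local min_bytes=${2:-4294967296}
    # -mindepth/-maxdepth 2 mirrors the data/*/m.txt glob from step b.
    find "$base" -mindepth 2 -maxdepth 2 -type f -name m.txt \
        -size +"${min_bytes}c" -print
}

# Example (run as root on the HDCA Server, GNU xargs assumed):
#   list_large_mtxt | xargs -r ls -lh
```

Any path this prints can then be archived with the tar command from step c.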

7. If you are unable to access the HDCA Server using the REST API, run the following curl command from the Analytics probe server CLI and share the output:

curl 'https://<HDCA_Server_IP_ADDRESS>:8443/dbapi.do?action=query&dataset=defaultDs&processSync=true' --insecure -u <HDCA_user>:<password> -H "Content-Type: application/json" -k1 -X POST -d '{"query":"raidStorage[=name rx .*]","startTime":"YYYYMMDD_HHMMSS","endTime":"YYYYMMDD_HHMMSS"}'

Example:

curl 'https://xxx.xxx.xxx.xxx:8443/dbapi.d...ocessSync=true' --insecure -u admin:Test@123 -H "Content-Type: application/json" -k1 -X POST -d '{"query":"raidStorage[=name rx .*]","startTime":"20171106_000000","endTime":"20171106_230000"}'
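
The command outputs requested in steps 2 to 5 can be saved to files before uploading. The sketch below is one possible way to do that, not an official tool: the capture function, the output directory, and the file names are all hypothetical. It writes the command line, its combined output, and its exit status to a file, so a failing command is still recorded.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command and save its output to a file.
#   $1     output file
#   $2...  command and arguments to run
capture() {
    local out_file=$1 status=0
    shift
    {
        printf '$ %s\n' "$*"            # record the command line
        "$@" 2>&1 || status=$?          # keep going even if the command fails
        printf '[exit status: %s]\n' "$status"
    } > "$out_file"
}

# Example usage on the HDCA Server (directory and file names are assumptions):
#   out=/tmp/detail_view_diag; mkdir -p "$out"
#   capture "$out/firewalld_status.txt" systemctl status firewalld
#   capture "$out/root_owned_dirs.txt"  find /data/megha -type d -user root
#   capture "$out/root_owned_files.txt" find /data/megha -type f -user root
#   capture "$out/nc_port80.txt"        nc -vz <Analytics probe server IP address> 80
#   tar -zcf "$out/detail_view_logs.tar.gz" -C /usr/local/megha logs
```

On the Analytics probe server the same calls apply with /home/megha in place of /data/megha, as in step 4.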

Additional Notes